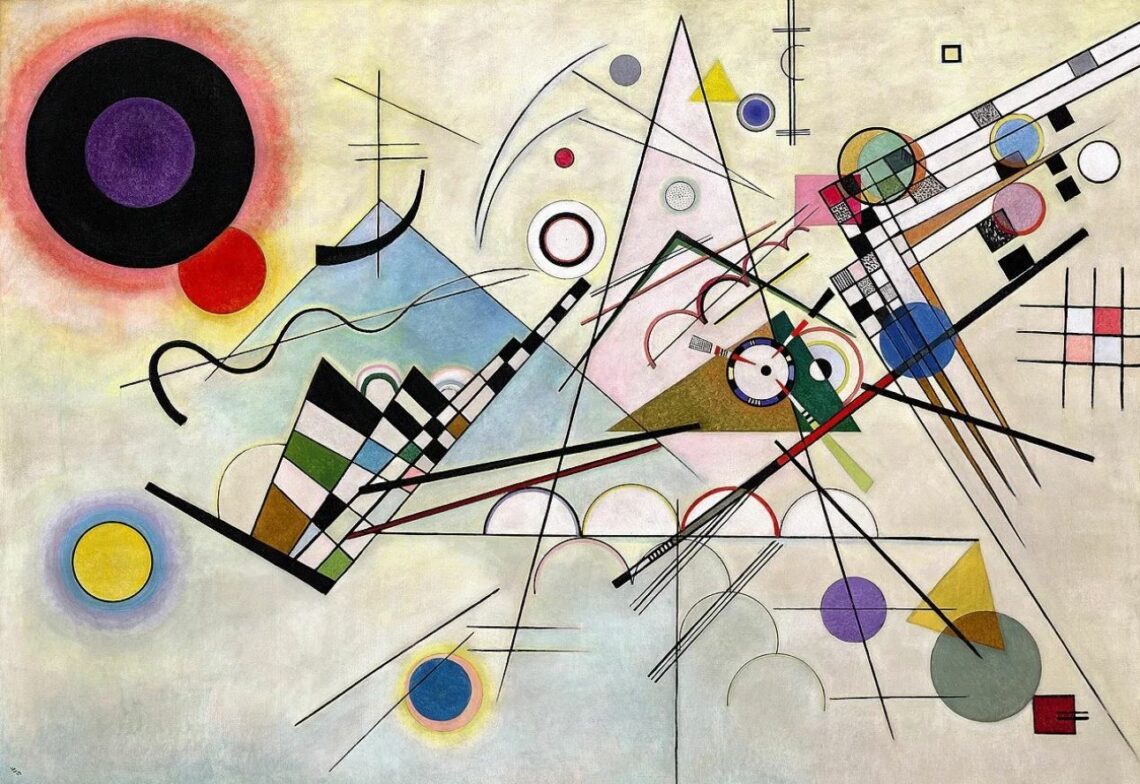

Let’s start—because apparently we have to—with a question lounging somewhere at the bottom of the rabbit hole, casually waving us toward a Wonderland that’s probably less magical and more algorithmically disturbing: What even is knowledge anymore?

In a world where ChatGPT can spit out your dissertation in the time it takes you to open a new Google Doc, what’s the actual value of knowing something? Or more precisely, what does it mean to know anything?

We’ll be taking the scenic route—complete with unnecessary tangents, half-researched detours into historical trivia no one asked for, the occasional emotional overshare tucked safely inside parentheses, and a generous helping of unverified hypotheses that, frankly, I’m not convinced I want to think about, let alone inflict on you.

This is the first in a three-part series on what knowledge means in the age of AI: past, present, and hypothetical-possibly-dystopian-but-maybe-beautiful future. Apologies in advance for the length. This was supposed to be a Twitter thread. It turned into something that requires a cup of coffee, a deep breath, and possibly a spiral-bound notebook to track your feelings.

But then again, maybe—just maybe—this topic deserves more than 280 characters and a meme. And here are three reasons why:

Because knowledge used to be a sacred thing. People died for it. Or at least lost sleep, eyesight, and the better part of their 30s in university libraries lit like interrogation rooms. It seems worth taking a few extra paragraphs to mourn and/or examine that.

Because the machines are catching up. And if we don’t stop to ask what makes human knowledge human, we may find ourselves living in a world where all the answers are right, and none of them matter.

Because the transformation of knowledge from a lifelong pursuit into an infinitely scrollable commodity is not just interesting—it’s kind of the whole story of our era. It’s the plot twist we’re living through. You can’t do that justice with a hot take and a GIF of a raccoon using a smartphone.

That said—because ambivalence is honest, and attention spans are mortal—here are three reasons why you maybe shouldn’t be bothered:

Because thinking about knowledge makes you feel weirdly unqualified to possess it. The deeper you go, the more impostor syndrome kicks in like a bad roommate. (“Who am I to ask epistemological questions when I just Googled how to spell ‘epistemological’?”)

Because you’ve got emails to answer, laundry to do, and a half-watched documentary about the algorithmic collapse of attention to finish. You’re busy. We all are. The irony is baked in.

Because deep down, you suspect the machines will figure it all out anyway. And maybe all we’re doing here is offering a sentimental soliloquy while the orchestra of automation swells to full volume behind us.

But still. We begin.

Because there’s something stubborn, maybe even noble, about thinking hard in an age built to make that inconvenient. About trying to say something real in a medium optimized for hot takes and shrugging emojis. About taking knowledge seriously—even as it becomes slippery, remixable, increasingly unmoored from the slow, intimate act of living it.

So yeah. This one ran long.

It’s the first part of a series. Three parts, probably. Unless we get derailed. Or enlightened. Or both.

Part 1

Classical Knowledge: When Knowing Things Was Labor (and Glory and Guts and, Occasionally, Cholera)

Once upon a time—and not in the cutesy, pastel-colored, Pixar-soundtracked sense but in the crushing, “I spent three years sailing to Alexandria just to read a scroll” sense—knowledge was the result of sweat. Literal, back-breaking, candle-burning, possibly-scarring physical labor.

We’re talking about a world where remembering things accurately was a high art. Where mnemonic systems were considered divine gifts, and oral traditions weren’t just cultural but existential. Entire tribes survived because some poor guy could chant genealogies backwards during a famine.

Think medieval monks, eyes bleeding from squinting at vellum, hand-copying Cicero in silence for thirty years because there were no Xerox machines and also God was watching. Think scribes in Alexandria debating whether a papyrus scroll was worth stealing from a visiting Phoenician ship captain. Think astronomers like Tycho Brahe, a data-obsessed nobleman with a metal prosthetic nose, who (as the story goes) died of a burst bladder because he couldn’t bring himself to leave a banquet table to urinate. That was the level of dedication that went into gathering planetary data.

In other words: knowledge used to hurt.

And yet, it was also sacred. Classically—Plato, Aristotle, Aquinas if you’re Catholic, Maimonides if you’re not—knowledge was defined as justified true belief. You didn’t just believe something because a guy in sandals said it confidently on a forum. You needed it to be true, and you needed good reasons. You had to defend it. Debate it. Cross-examine it with syllogisms, ethics, and metaphysics—like some early version of peer review, minus the journal subscriptions and LinkedIn humblebrags.

More importantly, knowledge wasn’t just abstract—it was the foundation of your entire social mobility apparatus. Knowing Latin could catapult you from pig-herder to papal advisor. Knowing mathematics could get you court access in Baghdad. And knowing the right winds, stars, and tides could help you navigate the Indian Ocean and return rich with pepper and morally dubious glory.

It wasn’t just about what you knew, but who knew that you knew it. There were guilds for knowledge. Oaths. Secrets. Rituals. A person with knowledge—real, hard-won, earned-through-the-knuckles knowledge—could become a scholar, a priest, an engineer, or a revolutionary. You could topple kings or build cathedrals. You could also be burned at the stake, which was sort of the Yelp review of pre-modern epistemology.

The classical world treated knowledge the way we now treat Bitcoin or Taylor Swift tickets: scarce, volatile, and capable of generating immense status if you held the right keys.

Knowledge used to be rare, dangerous, and deeply embodied. You got it through labor. You kept it through discipline. And if you were lucky, you profited from it—sometimes spiritually, sometimes financially, often both.

And all of this mattered, deeply, because the number of people who could even access knowledge was minuscule. Which meant that if you had it, you didn’t just possess facts—you possessed leverage. Influence. The power to change how people saw the world, or how the world saw itself.

But that was then. And then we invented the transistor.

20th Century Knowledge: Ctrl+F Enters the Chat, or the Century We Outsourced Our Minds to Machines

Then, computers happened.

Well, first Charles Babbage happened, in that slow-burn, Victorian polymath way that only involved partial builds, theoretical blueprints, and copious amounts of tea. Then Ada Lovelace happened, technically first in terms of understanding software, but, history being what it is, she was largely ignored until the 1980s when cyberfeminism became a thing. Then Alan Turing happened, who pretty much invented the idea that thinking could be mechanized, or at least convincingly simulated, while also being chemically castrated by the state for being gay, which no one wants to talk about when naming high schools after him.

Then came the coldly efficient, bespectacled onslaught of postwar engineers: IBM guys with plastic pens in their shirt pockets, speaking in acronyms, building monstrous machines that required punch cards and chilled rooms and a level of math competency most of us would now outsource to Wolfram Alpha.

And the important thing here, perhaps the tectonic thing, is that the locus of knowledge moved. Like, literally moved. Out of our heads, our notebooks, our libraries, our coffee-fueled collegiate seminars, and into machines. Externalized. Offloaded. Like luggage or trauma.

Suddenly, knowledge wasn’t about remembering anymore. It was about retrieving. Knowing where something lived became more important than actually holding it in your brain. This was a philosophical shift so profound it should’ve come with its own Gregorian chant and United Nations resolution.

Access replaced retention.

Imagine this: your grandparents memorized multiplication tables under the threat of corporal punishment. You, on the other hand, Google “long division” while watching a TikTok of someone cleaning a rug. This is not a moral failing—it’s just the new epistemological weather.

By the mid-to-late 20th century, computers weren’t just glorified calculators. They were memory devices. Information structures. The Dewey Decimal System gave way to the hyperlink. The archive gave way to the database. Authority—the scholarly, tweed-wearing kind—began to erode as the query replaced the thesis as the foundational unit of knowledge.

Think about it: Cold War scientists weren’t just racing the Soviets in terms of bombs or satellites. They were racing to know more faster. This is the age when metadata—the data about data—became just as valuable as the data itself. You didn’t need to know what—you needed to know how to search for what, and how to filter it once it arrived.

This gave rise to what corporate HR departments now euphemistically call “knowledge workers.” Which sounds noble and intellectual, but mostly means people who sit in open-plan offices toggling between Excel, Slack, and three Chrome tabs they forgot to close.

Entire industries emerged not around knowledge creation, but knowledge management. Filing systems. Knowledge bases. Intranets. Wikipedia. PowerPoint decks filled with bullet points masquerading as insights. Meanwhile, academic publishing turned into a multi-billion-dollar empire where knowledge was so expensive it became functionally inaccessible. Which is kind of poetic, if you’re into irony.

Knowledge, in the 20th century, became infrastructure.

Like roads or plumbing. Invisible unless it breaks. And the scary thing? This was seductive. Because it worked. It was easier. It felt modern. Why memorize the Periodic Table when you can just Ctrl+F your way through a PDF? Why study five years of jurisprudence when you can just ChatGPT your way through the difference between stare decisis and res ipsa loquitur?

But this outsourcing came at a cost. And not just the monthly fee for Microsoft 365.

As we got better at organizing information, we got worse at sitting with it. As access got faster, depth got shallower. As storage grew, interpretation shrank. We were flooded with more data than at any point in human history, but somehow the signal-to-noise ratio plummeted. Knowing became something you simulated for an audience—think TED Talks, LinkedIn posts, Medium articles with titles like “5 Ways to Hack Your Brain into Reading More Nietzsche.”

And let’s not forget the anxiety. The low-grade epistemic dread that everyone else knows something you don’t, and they have the podcast to prove it.

So yes, the 20th century did, in a sense, democratize knowledge: it spread it around like margarine across the scorched toast of modern life and gave (almost) everyone with a library card or a blinking dial-up connection the theoretical keys to the kingdom. But in the process, it also atomized it. Pulverized it into bite-sized bits. Knowledge became searchable, yes, but also strangely disposable: less meaningful, less embodied, less ours. Like it no longer required skin in the game, or time, or doubt, or failure, or even much attention. You just needed a password.

And somewhere—not quite at the surface, not exactly in the gut either, but somewhere between the default-mode network and the place in your brain that remembers the smell of school hallways—we feel it. This flickering sense that something isn’t quite aligned. That we’ve slipped into a version of reality that is always available, always shimmering with information, always prompting us to know more and more while, paradoxically, feeling less and less known by any of it.

We scroll and we search and we collect tabs like digital squirrels hoarding acorns of insight we’ll never crack open. And still it grows. The abundance. The overload. The sheer, galloping accumulation of things to know. And no one—not your favorite productivity guru, not the 2x speed podcast host, not even your browser history—can tell you how much of it actually matters.

And so we keep going—half-curious, half-anxious—through this flood of ambient knowing, hoping maybe, just maybe, there’s still something on the other side worth calling knowledge.

To be continued...

(Assuming I don’t get distracted by a notification, a revelation, or the scent of existential toner.)
