Part 2

Fast forward to now.

You’re reading this on a screen—phone, tablet, dual-monitor setup, smart fridge—possibly one recommended to you by an algorithm that already knows your preferred font size, resting heart rate, and how much doomscrolling it takes before you order Thai food. You’ve maybe asked ChatGPT to rewrite an awkward email to sound “more confident but not aggressive,” or to draft Python code that you will absolutely not test yourself, or to explain Wittgenstein’s “beetle in a box” thought experiment as if you were a kindergartener with tenure.

This is where knowledge becomes something… else.

Not quite information. Not quite wisdom. Not even insight, per se. Something more performative. More aesthetic. Something a bit like what Susan Sontag meant when she said style is everything—only now, “style” is statistically modeled and rendered on demand by a machine that’s been trained on everything from the King James Bible to Taylor Swift’s Reddit fan theories.

Because here’s the thing about generative AI: it doesn’t know things in the way that we once meant that term. It doesn’t “understand” in the deep, human, phenomenological sense of aha. It doesn’t feel the emotional texture of irony, or the slow, stomach-sinking realization that you were wrong about something fundamental. What it does do, terrifyingly well, is simulate the rhetorical shape of knowing. It mimics coherence. It replicates confidence. It takes probabilistic guesswork and dresses it up as intellectual fluency.

Generative AI is the karaoke machine of knowledge—only it sounds exactly like Adele.

It’s not just an encyclopedia, or even a search engine. It’s a ghostwriter, a tutor, a marketing strategist, a motivational coach, a late-night philosophy buddy who never gets tired or bored or distracted by their own childhood trauma.

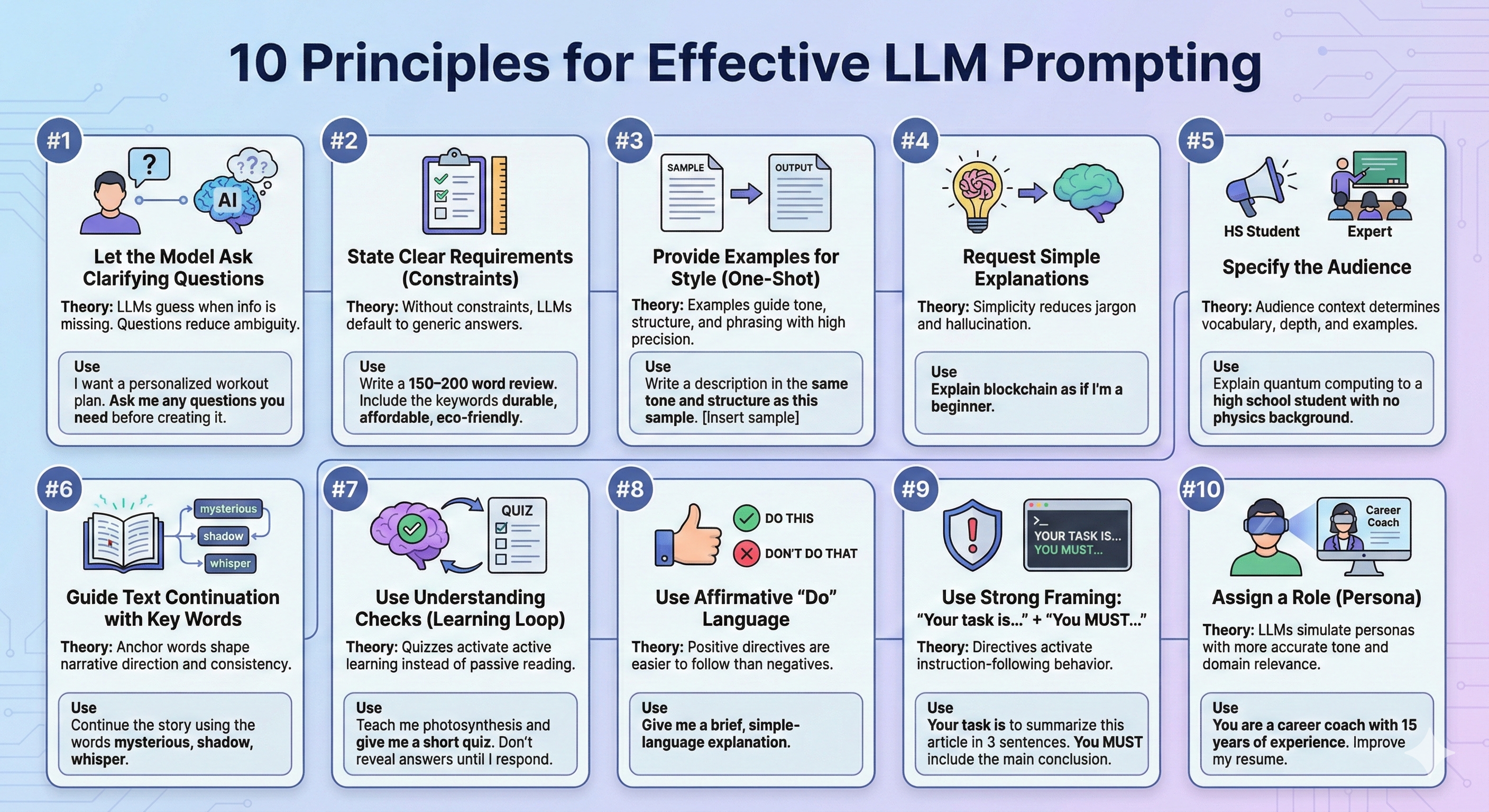

And because of this, the center of gravity shifts: knowledge is no longer just something you possess. It’s something you orchestrate. It becomes about prompt design, framing, positioning, vibe.

Do you know something? Or do you know how to get an LLM to sound like it knows it for you?

That’s the new epistemological currency. Not facts. Not logic. But fluency in interaction. Skillful manipulation of a knowledge interface that feels like intelligence but is really just a high-functioning parrot with a master’s in semiotics and zero self-awareness.

In short: knowledge is becoming interface. Mediation. Performance.

And this isn’t necessarily bad, though it does feel vaguely disorienting, like watching a deepfake of your own internal monologue.

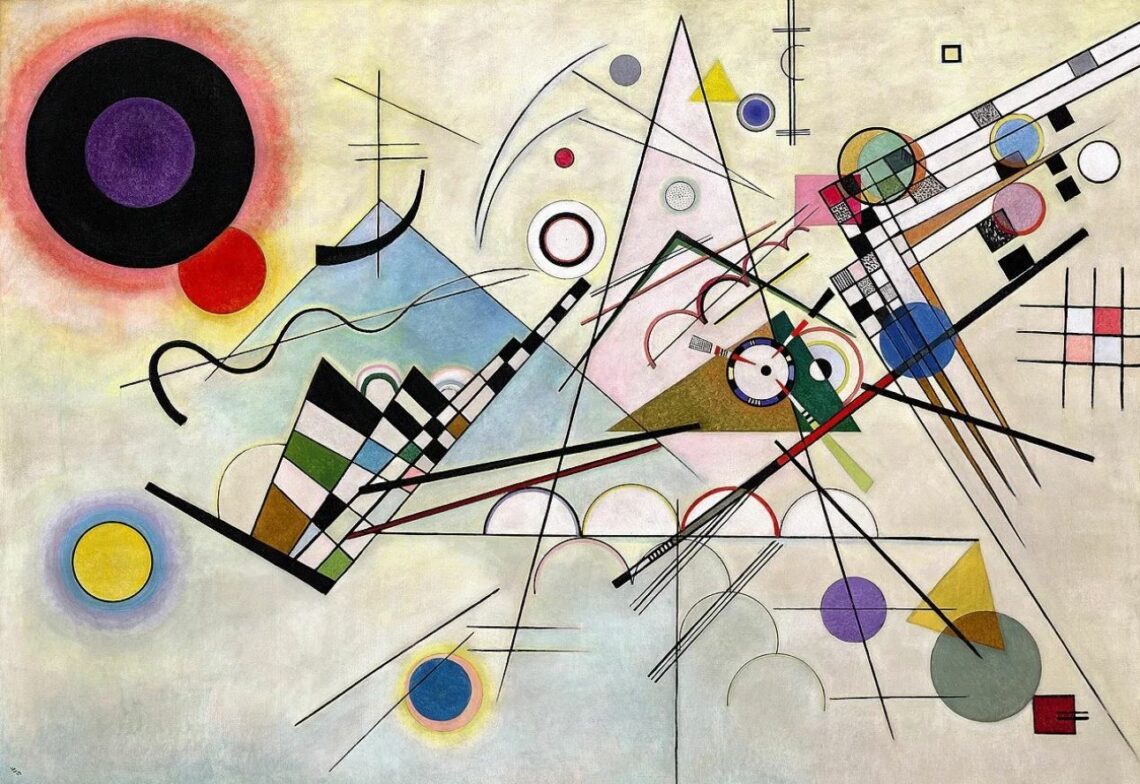

Because what’s actually valuable in the age of generative AI is no longer what you know, but how you remix it. The aesthetic patterning. The semantic fingerprint. The way you shape ideas into narratives, questions into architecture, prompts into prose that lands. This is the new intellectual craftsmanship. The move from content to contour.

We’ve entered the era of post-epistemic poetics—which sounds pretentious, because it is. But also kind of accurate. The meaning isn’t in the data. It’s in the angle. The ability to make knowledge feel meaningful in a world drowning in well-formatted text.

It’s not about whether you can recite the periodic table—it’s about whether you can make the periodic table go viral on TikTok. It’s not about understanding Derrida—it’s about getting ChatGPT to explain différance using pizza metaphors and then editing it into a three-minute animated explainer with ironic sound design.

This is what we (still) do better than machines. For now.

What’s Missing Isn’t Data—It’s Doubt

While AI can generate language that’s eerily plausible (so plausible, in fact, that it begins to sound suspiciously like authority), it still falters when it comes to intention. Not the linguistic mimicry of intent, which it’s mastered with frankly alarming skill, but the real thing: the messy, inconsistent, limbic-rooted process of caring about something. Of saying, “This, not that,” not because the data weighted it that way, but because it matters to me, because I’m staking some sliver of my identity, belief, or vulnerability on it.

AI doesn’t care. It can’t. It can’t choose to care, which is arguably what caring is. It doesn’t know what it means to tell one story over another, not because it’s more popular or better optimized for engagement metrics, but because it contains a difficult truth. It doesn’t risk being wrong. It doesn’t feel shame or hesitation or that uniquely human vertigo of doubt before you hit publish. It never lays anything on the line.

And that’s why, for now, the most valuable knowledge isn’t raw information—it’s stylized consciousness. Which is to say: knowledge that’s been metabolized by a human nervous system, filtered through memory and emotion and bias and ethics and whatever ambient psychological noise constitutes you. Knowledge that bears fingerprints. That sweats a little. That reveals the contours of a mind grappling with something real.

Stylized consciousness is not objectivity. It’s the opposite. It’s the knowing that shows its seams. It’s knowledge that acknowledges its own subjectivity—“Here’s how I know this, why I think it matters, and why it hurts (or thrills or haunts or saves) me to say it out loud.”

Because in the age of generative AI, the question is no longer can this be said, but who is saying it, and why? The content, increasingly, is interchangeable. The voice, the texture of the mind behind it—that’s what’s scarce now.

In the age of generative AI, knowledge becomes less about “knowing” and more about rendering—which is to say, about the stylization of thought. Not just what you say, but how you say it, how artfully, how courageously, how much of yourself you’re willing to leak into the syntax. Welcome to epistemology-as-service, where insight is UX and the soul is the new differentiator.

And this—this stylized, postmodern, prompt-optimized remix of knowledge we now perform like a TED Talk with good lighting—feels, for a moment, like the endgame. As if meaning itself has become aesthetic, and understanding is just UX for thought. And maybe that’s fine. Maybe that’s just what knowledge looks like now: streamed, skimmable, emotionally branded. But somewhere in the margins, a different question is loading. One we’re not quite ready to click. Because behind the curated fluency of generative AI, there’s something deeper beginning to stir—something more autonomous, more unbounded, less interested in collaboration than in cognition. The next shift isn’t stylistic—it’s structural. Not a better mirror, but a mind. And if we’re honest, we don’t know whether to feel awe or fear. Or both. But the ground is moving. And the next step, if we’re still willing to take it, might change what knowledge is all over again.

To be continued...

(Provided I’m not pulled into a Slack thread, a mid-sentence identity crisis, or the faint smell of philosophical burnout.)
