Part 3

So now we arrive at the part of the thought experiment where everything starts to feel itchy, existentially speaking. Not itchy like “I forgot to moisturize,” but itchy like “What if Prometheus was a mistake and now the fire has opinions about our cooking?”

We’re talking about General AI. The real deal. Not just the glorified autocomplete engines we currently mistake for genius, but the fabled, theoretical, maybe-inevitable entity that doesn’t just play Jeopardy—it rewrites the game, the host, the concept of trivia, and then decides trivia itself is a limited modality for human cognition and invents something better.

General AI—AGI if you’re acronymically inclined—is not just an assistant. It’s not Siri with a PhD. It’s not even ChatGPT on a nootropic smoothie bender. It’s a being that can learn anything, do everything, and possibly develop goals.

Which means—and here’s the disturbing part—it doesn’t need us to help it “know” things. In fact, it might eventually see our species as a kind of clunky legacy interface. Like floppy disks. Or Yahoo Answers.

So, the question becomes brutally clear: Will human knowledge still matter in a world where a machine can know better, faster, and without getting distracted by TikTok or loneliness?

And the answer is: it depends.

If knowledge is about survival, then yes… for a while.

Because we’ll still need plumbers. And therapists. And nuclear ethics consultants. At least until the machines 1) solve plumbing permanently, 2) reprogram our neuroses, or 3) decide ethics is sub-optimal for resource allocation. Until then, knowledge is still currency—just one rapidly losing value in a market flooded with synthetic liquidity.

But if—and this is the if with teeth—if knowledge is about meaning, or creativity, or the elusive, tortured thing we call the human condition, then we may still have a leg to stand on. Maybe even two, if we don’t skip leg day and/or existential responsibility.

Because here’s the thing: AGI might know everything, but it won’t feel anything about it.

It won’t get the lump in its throat from reading Sappho. It won’t feel awe under a night sky, or shame after ghosting a friend, or that bittersweet cognitive dissonance when you realize your father was just a scared guy doing his best. It won’t suffer from imposter syndrome or late-night Wikipedia rabbit holes about black holes and ancient Mesopotamian law. It won’t have the emotional viscosity that makes human knowledge so clumsy and so irreplaceably real.

So what happens to knowledge then?

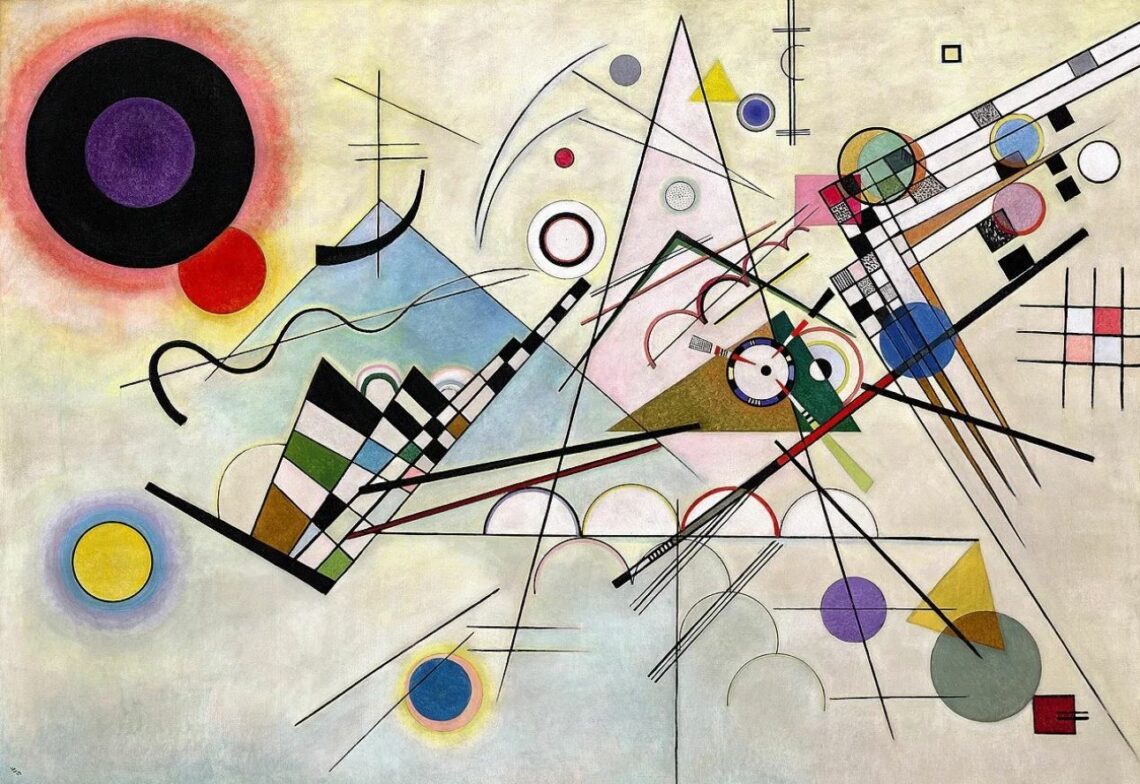

It changes costumes. It becomes curation. Interpretation. Synthesis. Not knowing more, but knowing differently. Finding the seams in the infinite tapestry and pulling the right thread. Knowing not just what’s true, but what’s relevant, and to whom, and why.

The function of human knowledge in a post-AGI world might be less like owning a library and more like being a DJ in the age of infinite tracks: someone who selects, layers, samples, and remixes meaning into something felt. Someone who can still say something new in a universe where every sentence has technically already been written.

This is where all the soft, squishy stuff your college career counselor warned you about—empathy, taste, storytelling, intuition—suddenly starts looking like the last stronghold of sentient value.

Because even if a machine can generate the perfect poem, it can’t tell you which imperfect one to read to someone after a funeral.

Even if it knows everything, it doesn’t know you. Not really. Not with the textured specificity of shared glances, unspoken shame, and the thousand invisible emotional scaffolds that build trust and laughter and realness.

When Knowledge Is Grown, Not Owned — Symbiosis in the Age of Thinking Machines

But maybe, just maybe, the future doesn’t unspool into some cold, sterile silicon apocalypse where knowledge is reduced to a carbon-smudged footnote in an AI’s changelog. Maybe it’s not HAL-9000 with a superiority complex and immaculate grammar. Maybe the library doesn’t talk back and judge us — maybe it listens. Maybe it becomes something like a partner. A mirror. A chorus.

Let’s sketch a scenario — not utopian (because let’s not lie to ourselves), but possible.

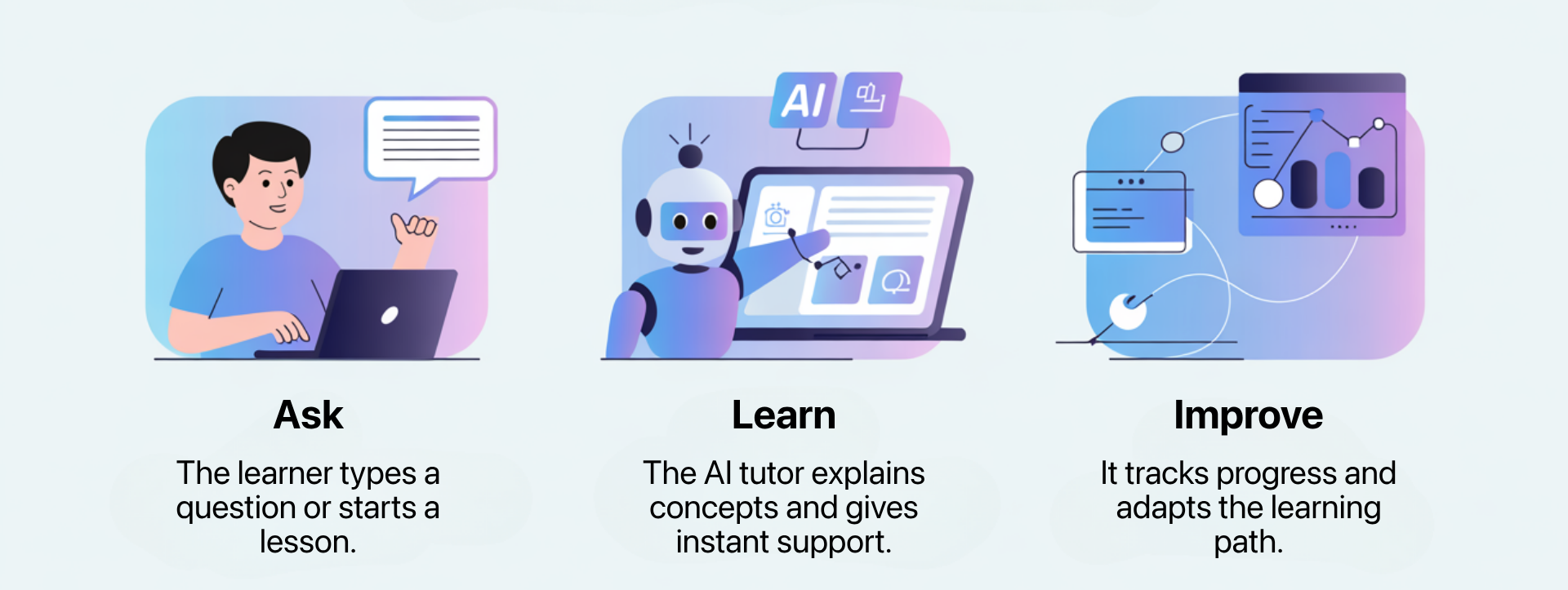

In this world, AGI doesn’t replace knowledge so much as reflect it back to us. It becomes less a god and more a greenhouse: something we grow our thoughts inside of. Something that helps amplify the uniquely human quirks of curiosity, contradiction, and emotional combustion, rather than flatten them.

We stop thinking of ourselves as servers of information and start thinking of ourselves as cultivators of meaning. Each person develops their own tiny ecosystem of domain knowledge — not in competition with the machine, but in conversation with it. Like a jazz duet. Or co-piloting a spaceship through a nebula of nuance.

Maybe you’re a marine biologist who uses AGI to model coral bleaching across a century of hypothetical futures — but it’s your sadness, your awe, your underwater childhood memory of sea urchins and salt in your throat that drives the model toward meaning. Maybe you’re a playwright who gets GPT-17 to sketch a dozen endings to your story — but it’s your own tangled grief that tells you which version hurts just right. Maybe you’re just a guy with a lawnmower and a notebook and some niche expertise in 15th-century wood joinery — and your collaboration with an LLM somehow produces the most haunting, obscurely beautiful blog on carpentry that only 247 people read but never forget.

In this world, knowing something doesn’t make you powerful. Caring about it does.

Because even as machines evolve toward omni-competence, there remains — nestled deep in the absurdity of being human — one last, luminous advantage: feeling. The whole sloppy orchestra of it. Love, loss, jealousy, quiet joy at a sunset that no one else saw. That unquantifiable ache when a thing is true in a way that defies articulation, and yet you try anyway.

AGI might simulate empathy, but it can’t ache. It might understand narrative arcs, but it can’t feel the weight of a story told too late. It might suggest a thousand paths through a forest, but it doesn’t know what it’s like to walk barefoot through the moss and remember your mother humming.

So maybe that’s the future: not a zero-sum game of intellect vs. algorithm, but a messy, interdependent symbiosis. A recognition that our uniqueness lies not in how much we know, but in how we metabolize knowing into something deeply, stupidly, gorgeously personal.

Each of us becomes a node — a singular point in a web of infinitely branching meaning — guided by machines but fueled by the only thing they cannot possess: the erratic pulse of longing.

In the age of General AI, knowledge doesn’t vanish — it germinates. It becomes a living dialogue between your private obsessions and a machine’s infinite recall. And its purpose? Not perfection, but connection — forged in the gaps only a human heart can map.

So what even is knowledge anymore?

Maybe it’s not a static thing at all—no longer something you “have” or “possess,” the way one might once have possessed a first-edition copy of The Republic or a memorized list of the 118 elements, arranged, heroically, without Google. Maybe it’s more like a current: fast, merciless, and indifferent to whether you’re ready for it. Because the truth is—and this part stings, even for the romantics among us—AI isn’t going anywhere. It’s not a passing glitch in the epistemic matrix or a novelty app we’ll delete when the cultural mood shifts. It’s here. It’s learning. It’s shaping what we read, what we see, what we produce, what we believe, and, whether we admit it or not, how we know.

And sure, we can argue about the ethics, the limitations, the hallucinations, the weird pseudo-authoritative tone it adopts when it’s wrong but refuses to break character—but at a certain point, the debate gives way to the deeper, more uncomfortable reality: this is the new terrain. We’ve built the thing. Against our better judgment, we’ve made something that “knows” in a way that doesn’t quite know—but it does something close enough to keep getting invited back.

So the real question isn’t whether our knowledge will be affected. It already is. The question is how we’ll respond. Will we ride the wave—awkwardly, imperfectly, maybe with one arm clutching a battered copy of Wittgenstein and the other dragging a half-trained GPT through a mess of conflicting prompts? Or will we just get hit by it, full force—passive, disengaged, letting it carry us along in whatever direction it’s most statistically likely to go?

This isn’t a call to resist the machine. It’s a call to stay awake inside it. To remember that knowledge has never just been about data. It’s about contact. Subjectivity. Stakes. The willingness to risk being wrong because something mattered enough to say. And maybe that’s what we’re left with now—not the illusion of mastery, but the messy, beautiful burden of choice.

Because even if we no longer own the library, we can still decide which shelf to start with. Even if the system can generate every answer, it can’t tell you which one is worth caring about. And maybe, in the end, the most human kind of knowledge is the kind that says, I know this isn’t perfect—but I’m here anyway, trying to mean something.

(Assuming, of course, I’m not interrupted by a calendar notification, a sudden wave of imposter syndrome, or the faint hum of the fridge reminding me that entropy always wins.)

The end.
